Consider a very small master-worker system with two players: one master and one worker. The master is the user or client that needs the work done, and the worker is the processor that actually does it.

Let’s break each of these down into its states:

Master:

1. Work pending

2. Work submitted for completion

3. Work completed and results returned

Worker:

1. Working

2. Work completed

3. Idle and results returned

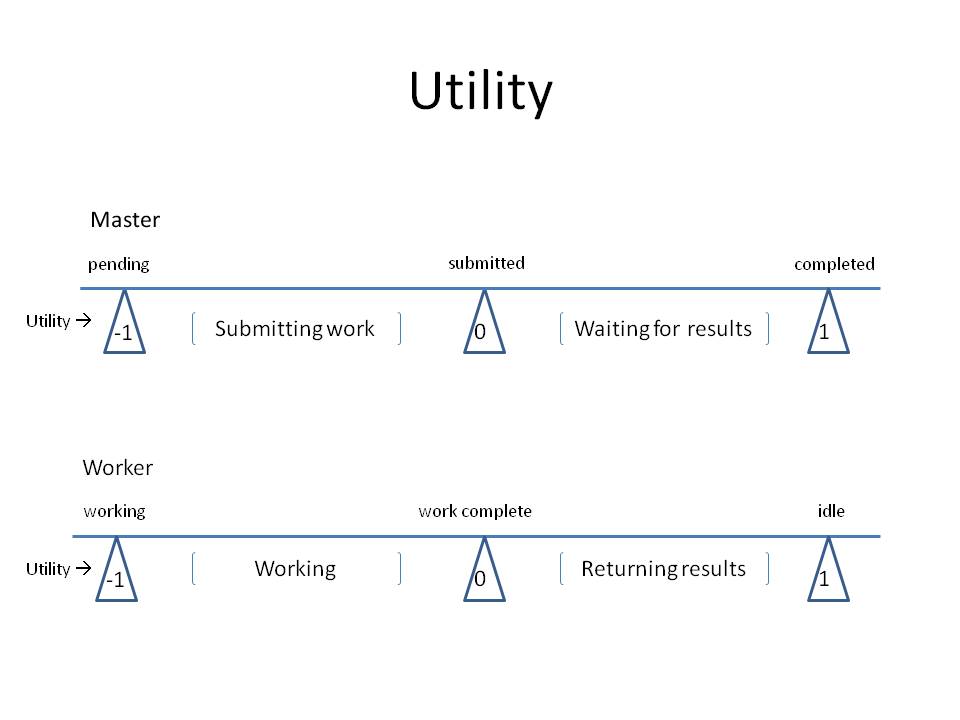

Based on our earlier discussion around utility, we can further assign an arbitrary utility to each of these states. We can then model the utility as a function of workload for the two participants.

As you can see, each state has been assigned a utility. When the processor is working, it has its lowest utility of -1, and when it is idle, it has its highest utility of +1. For the client (the master), utility is lowest when there is work pending and highest when all the work has been completed.
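To make the assignment concrete, here is a minimal Python sketch of the two state sets and a utility for each state. The names (`MasterState`, `WorkerState`, `utility`) are hypothetical. Only the worker's -1 (working) and +1 (idle) come from the text. The 0 for the middle states and the -1/+1 for the master's end states are assumptions made for illustration.

```python
from enum import Enum


class MasterState(Enum):
    WORK_PENDING = "work pending"
    WORK_SUBMITTED = "work submitted for completion"
    WORK_COMPLETED = "work completed and results returned"


class WorkerState(Enum):
    WORKING = "working"
    WORK_COMPLETED = "work completed"
    IDLE = "idle and results returned"


# Assumed values: the text only says the master is lowest with work pending
# and highest when all work is completed; -1, 0, +1 mirror the worker's range.
MASTER_UTILITY = {
    MasterState.WORK_PENDING: -1.0,
    MasterState.WORK_SUBMITTED: 0.0,
    MasterState.WORK_COMPLETED: 1.0,
}

# -1 (working) and +1 (idle) are from the text; 0 in between is an assumption.
WORKER_UTILITY = {
    WorkerState.WORKING: -1.0,
    WorkerState.WORK_COMPLETED: 0.0,
    WorkerState.IDLE: 1.0,
}


def utility(state):
    """Return the utility assigned to a master or worker state."""
    if isinstance(state, MasterState):
        return MASTER_UTILITY[state]
    return WORKER_UTILITY[state]
```

For example, `utility(WorkerState.WORKING)` returns `-1.0` and `utility(WorkerState.IDLE)` returns `1.0`. Since the utilities are arbitrary, any values that preserve the ordering of the states would serve the same purpose.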

There is an intrinsic notion of time built into this model. Imagine, if you will, that a given client goes from its lowest utility to its highest utility as more of its work is submitted and completed. This is obviously time-dependent.

The same is true for the worker: a given CPU is at its lowest utility when it is working, but as it completes the work given to it, it moves toward a higher utility.
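One way to picture this time dependence is to treat utility as a function of how much of the workload has been completed. The sketch below assumes a linear relationship between the fraction of work completed and utility, scaled to the range -1 to +1. The text only says that utility rises as work completes, so the linear shape and the function names are illustrative assumptions.

```python
def utility_from_progress(completed, total):
    """Map completed work to a utility in [-1, +1].

    Assumes a linear relationship: -1 when nothing is done,
    +1 when everything is done.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= completed <= total:
        raise ValueError("completed must be between 0 and total")
    fraction = completed / total
    return -1.0 + 2.0 * fraction


def utility_trajectory(total_tasks):
    """Utility after each task completes, starting with none done."""
    return [utility_from_progress(done, total_tasks)
            for done in range(total_tasks + 1)]


print(utility_trajectory(4))  # [-1.0, -0.5, 0.0, 0.5, 1.0]
```

Each step in the list corresponds to one more task finishing, so the index plays the role of time. The same curve applies to either participant in this simple model, since both the client and the worker move from their lowest to their highest utility as the work completes.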

Art Sedighi